Intro

Yesterday I compared different JDK versions and OpenJ9 versus HotSpot on memory and speed. The memory part of the test was realistic if you ask me: an actual working Spring Boot application that served REST objects.

The speed/CPU test however was… lacking. Sorting some random arrays, just one specific test.

Today I decided to test OpenJ9 and HotSpot a bit more using an actual benchmark: SPECjvm2008.

SPECjvm2008

SPEC (Standard Performance Evaluation Corporation) has a couple of well-defined benchmarks and tests, including an old JVM benchmark called SPECjvm2008. This is an elaborate benchmark testing things like compression, compiling, XML parsing and much more. I decided to download it and give it a spin against OpenJ9 and HotSpot. This should be a much fairer comparison.

Initially I ran into some issues: some of the tests didn’t work against Java 8, and the benchmark wouldn’t even start against Java 9+. Eventually I got it working by excluding a couple of benchmarks and running only the following ones:

java -jar SPECjvm2008.jar startup.helloworld startup.compiler.compiler startup.compress startup.crypto.aes startup.crypto.rsa startup.crypto.signverify startup.mpegaudio startup.scimark.fft startup.scimark.lu startup.scimark.monte_carlo startup.scimark.sor startup.scimark.sparse startup.serial startup.sunflow startup.xml.validation compiler.compiler compress crypto.aes crypto.rsa crypto.signverify derby mpegaudio scimark.fft.large scimark.lu.large scimark.sor.large scimark.sparse.large scimark.fft.small scimark.lu.small scimark.sor.small scimark.sparse.small scimark.monte_carlo serial sunflow xml.validation

Testing

The Docker images used in these tests are both OpenJDK 8 builds, one with HotSpot underneath, the other with OpenJ9:

- adoptopenjdk/openjdk8

- adoptopenjdk/openjdk8-openj9

Again I started the Docker image with a directory linked to the host containing the SPEC benchmark:

- Start Docker:

docker run -it -v /Projects/SPECjvm2008:/app/SPECjvm2008 adoptopenjdk/openjdk8-openj9 /bin/bash

- Go to the correct directory:

cd /app/SPECjvm2008

- Run the (working) tests:

java -Xmx600m -jar SPECjvm2008.jar startup.helloworld startup.compiler.compiler startup.compress startup.crypto.aes startup.crypto.rsa startup.crypto.signverify startup.mpegaudio startup.scimark.fft startup.scimark.lu startup.scimark.monte_carlo startup.scimark.sor startup.scimark.sparse startup.serial startup.sunflow startup.xml.validation compiler.compiler compress crypto.aes crypto.rsa crypto.signverify derby mpegaudio scimark.fft.large scimark.lu.large scimark.sor.large scimark.sparse.large scimark.fft.small scimark.lu.small scimark.sor.small scimark.sparse.small scimark.monte_carlo serial sunflow xml.validation

Results

After waiting a long time for the benchmark to finish, I got the following results:

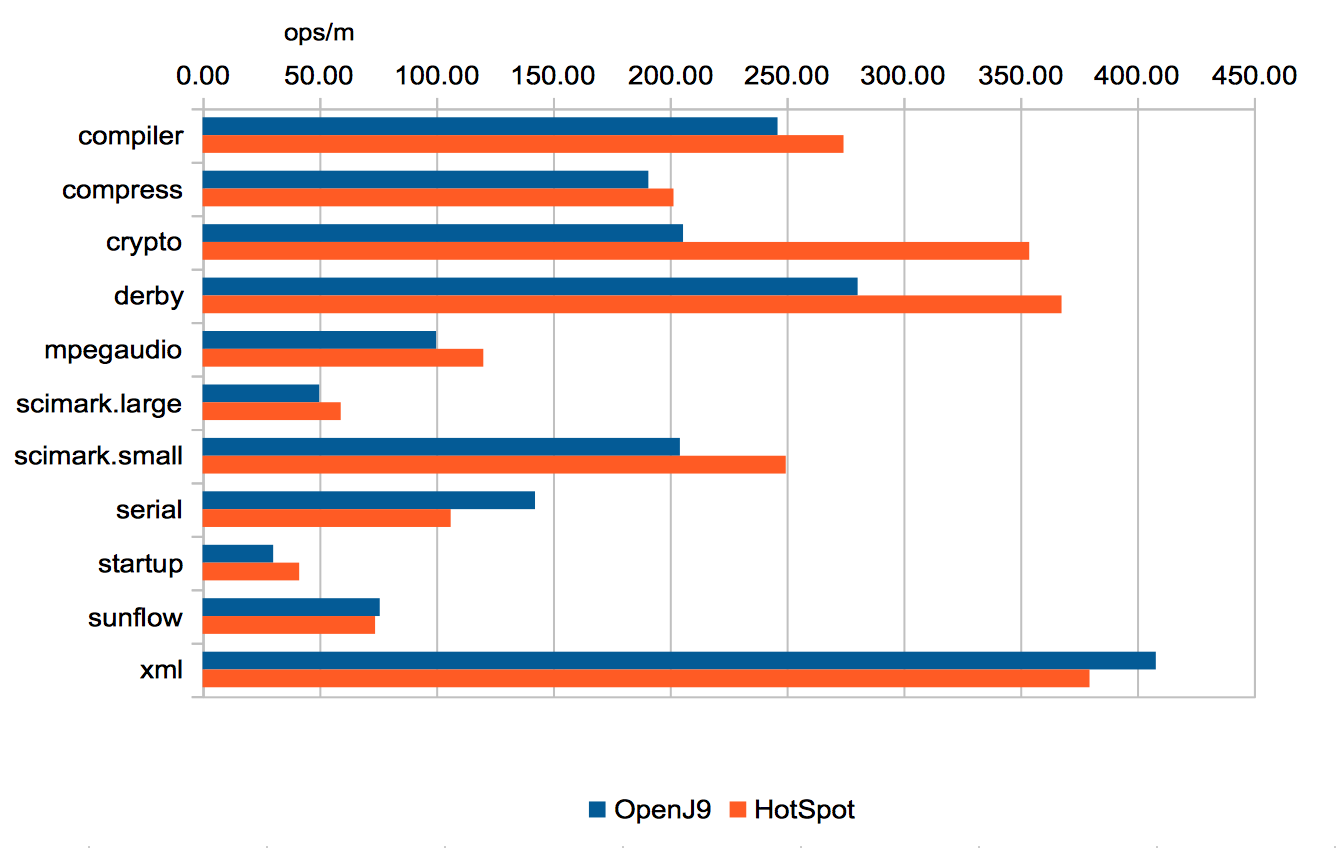

The graph shows ops/m (operations per minute); higher is better. Results will of course vary depending on hardware.

In most cases HotSpot is faster than OpenJ9, and in two cases, crypto and derby, HotSpot is much faster. It appears HotSpot is doing something special here that J9 isn’t doing (yet?). This might be important to know if you’re working on applications that do a lot of cryptography, for example high-performance secured endpoints.

One place where OpenJ9 came out on top is XML validation. Parsing/validation is also an important part of most modern applications, so this could be a case where J9 makes up some lost ground in actual production code.

Conclusion

Is there a real conclusion from this? I don’t think so.

The real lesson here is: Experiment, measure and you’ll know. Never decide anything based on some online benchmark.
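If you want to repeat the measurement on your own hardware, something like the following might save some typing. It is only a rough sketch, not the exact procedure used for the graph: it runs the same command non-interactively against both images instead of starting a shell first. The `spec_cmd` helper, the `SPEC_DIR` and `RUN` variables and the shortened benchmark selection are my own assumptions for illustration; swap in the full list from the command above.

```shell
#!/bin/sh
# Sketch: run the same SPECjvm2008 selection against both JVM images.
# SPEC_DIR is an assumption; it defaults to the host path used earlier.
SPEC_DIR="${SPEC_DIR:-/Projects/SPECjvm2008}"
IMAGES="adoptopenjdk/openjdk8 adoptopenjdk/openjdk8-openj9"

# Shortened selection for illustration only; use the full list for a real run.
BENCHMARKS="compress crypto.aes derby xml.validation"

# Hypothetical helper: print the docker command for one image.
# --rm removes the container afterwards, -w sets the working directory.
spec_cmd() {
  echo "docker run --rm -v $SPEC_DIR:/app/SPECjvm2008 -w /app/SPECjvm2008 $1 java -Xmx600m -jar SPECjvm2008.jar $BENCHMARKS"
}

for image in $IMAGES; do
  cmd=$(spec_cmd "$image")
  echo "$cmd"
  # Dry run by default; set RUN=1 to actually start the benchmark.
  if [ "${RUN:-0}" = "1" ]; then
    $cmd
  fi
done
```

Without `RUN=1` the script only prints the two commands, which is a cheap way to check the paths before committing to a long benchmark run.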

If there is anything else you’d love me to test, send me a tweet: royvanrijn